Introduction

NVIDIA’s Jetson Orin Nano promises impressive specs: 1024 CUDA cores, 32 Tensor Cores, and 40 TOPS of INT8 compute performance packed into a compact, power-efficient edge device. On paper, it looks like a capable platform for running Large Language Models locally. But there’s a catch—one that reveals a fundamental tension in modern edge AI hardware design.

After running 66 inference tests across seven different language models ranging from 0.5B to 5.4B parameters, I discovered something counterintuitive: the device’s computational muscle sits largely idle during single-stream LLM inference. The bottleneck isn’t computation—it’s memory bandwidth. This isn’t just a quirk of one device; it’s a fundamental characteristic of single-user, autoregressive token generation on edge hardware—a reality that shapes how we should approach local LLM deployment.

The Hardware: What We’re Working With

The NVIDIA Jetson Orin Nano 8GB I tested features:

- GPU: NVIDIA Ampere architecture with 1024 CUDA cores and 32 Tensor Cores

- Compute Performance: 40 TOPS (INT8), 10 TFLOPS (FP16), 5 TFLOPS (FP32)

- Memory: 8GB LPDDR5 unified memory with 68 GB/s bandwidth

- Available VRAM: Approximately 5.2GB after OS overhead

- CPU: 6-core ARM Cortex-A78AE (ARMv8.2, 64-bit)

- TDP: 7-25W configurable

The unified memory architecture is a double-edged sword: CPU and GPU share the same physical memory pool, which eliminates PCIe transfer overhead but also means you’re working with just 5.2GB of usable VRAM after the OS takes its share. This constraint shapes everything about LLM deployment on this device.

Testing Methodology

The Models

I tested seven models ranging from 0.5B to 5.4B parameters—essentially the entire practical deployment range for this hardware. The selection covered two inference backends (Ollama and vLLM) and various quantization strategies:

Ollama-served models (with quantization):

- Gemma 3 1B (Q4_K_M, 815MB)

- Gemma 3n E2B (Q4_K_M, 3.5GB, 5.44B total params, 2B effective)

- Qwen 2.5 0.5B (Q4_K_M, 350MB)

- Qwen 3 0.6B (FP8, 600MB)

vLLM-served models (minimal/no quantization):

- google/gemma-3-1b-it (FP16, 2GB)

- Qwen/Qwen2.5-0.5B-Instruct (FP16, 1GB)

- Qwen/Qwen3-0.6B-FP8 (FP8, 600MB)

The Testing Process

Each model faced 10-12 prompts of varying complexity—from simple arithmetic to technical explanations about LLMs themselves. All tests ran with batch size = 1, simulating a single user interacting with a local chatbot—the typical edge deployment scenario. Out of 84 planned tests, 66 completed successfully (78.6% success rate). The failures? Mostly out-of-memory crashes on larger models and occasional inference engine instability.
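The write-up does not say how speed was recorded. One plausible approach, sketched here under the assumption that runs went through Ollama’s `/api/generate` endpoint, is to read the `eval_count` (generated tokens) and `eval_duration` (nanoseconds) fields from the final response object and aggregate them per model. Marking a failed run with `None` and using the sample standard deviation are illustrative choices, not necessarily the author’s.

```python
import statistics
from typing import List, Optional


def decode_tokens_per_second(final_chunk: dict) -> float:
    """Generated tokens per second from Ollama's final /api/generate object.

    eval_count is the number of generated tokens; eval_duration is the time
    spent generating them, in nanoseconds.
    """
    return final_chunk["eval_count"] / (final_chunk["eval_duration"] / 1e9)


def summarize_runs(results: List[Optional[dict]]) -> dict:
    """Aggregate one model's runs; None marks a failed run (e.g., an OOM crash)."""
    completed = [r for r in results if r is not None]
    speeds = [decode_tokens_per_second(r) for r in completed]
    return {
        "avg_tps": statistics.mean(speeds) if speeds else 0.0,
        # Sample standard deviation (an assumption; needs at least two runs)
        "std_tps": statistics.stdev(speeds) if len(speeds) > 1 else 0.0,
        "success_rate": len(completed) / len(results) if results else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only
    runs = [
        {"eval_count": 100, "eval_duration": 2_500_000_000},
        {"eval_count": 120, "eval_duration": 3_000_000_000},
        None,
    ]
    print(summarize_runs(runs))
```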

Understanding the Limits: Roofline Analysis

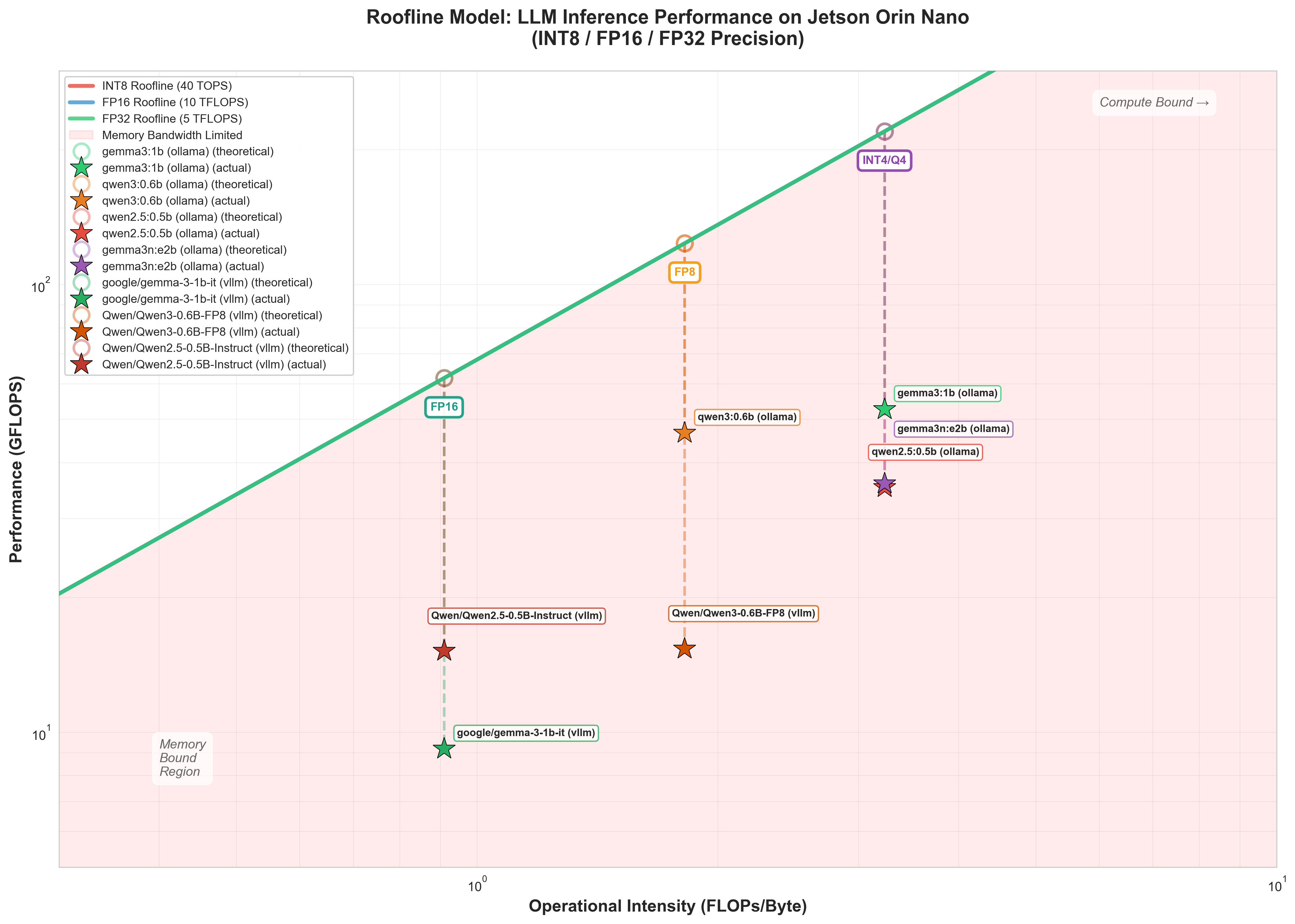

To understand where performance hits its ceiling, I applied roofline analysis—a method that reveals whether a workload is compute-bound (limited by processing power) or memory-bound (limited by data transfer speed). For each model, I calculated:

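- FLOPs per token: approximately 2 × parameters (one multiply and one add per weight in the forward pass’s matrix multiplications; for gemma3n:e2b, the 2B effective parameter count)

- Bytes per token: parameters × (bits per parameter / 8) × 1.1, i.e., the weights at their nominal precision (4.5 bits for Q4_K_M, 8 for FP8, 16 for FP16) plus 10% overhead for activations and KV cache

- Operational Intensity (OI): FLOPs per token / bytes per token

- Theoretical performance (tokens/s): min(peak compute / FLOPs per token, memory bandwidth / bytes per token)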

The roofline model works by comparing a workload’s operational intensity (how many calculations you do per byte of data moved) against the device’s balance point (peak compute divided by memory bandwidth). If your operational intensity is below that point, you’re bottlenecked by memory bandwidth, and as we’ll see, that’s exactly what happens with LLM inference.
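The calculation in the list above can be reproduced with a short Python sketch. It assumes, as the reported table values imply, that bytes per token come from nominal parameter counts and nominal bits per parameter rather than on-disk file sizes, and that gemma3n:e2b is counted at its 2B effective parameters. The `ModelConfig` and `roofline` names are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean

BANDWIDTH_BYTES_PER_S = 68e9  # Jetson Orin Nano 8GB, LPDDR5
PEAK_OPS_PER_S = 40e12        # advertised INT8 peak
OVERHEAD = 1.1                # +10% for activations and KV cache


@dataclass
class ModelConfig:
    name: str
    params: float          # parameters read per generated token
    bits_per_param: float  # nominal precision: 4.5 (Q4_K_M), 8 (FP8), 16 (FP16)


def roofline(cfg: ModelConfig, measured_tps: float) -> dict:
    flops_per_token = 2 * cfg.params  # one multiply + one add per weight
    bytes_per_token = cfg.params * cfg.bits_per_param / 8 * OVERHEAD
    compute_limit = PEAK_OPS_PER_S / flops_per_token           # tokens/s
    bandwidth_limit = BANDWIDTH_BYTES_PER_S / bytes_per_token  # tokens/s
    theoretical = min(compute_limit, bandwidth_limit)
    return {
        "oi": flops_per_token / bytes_per_token,
        "theoretical_tps": theoretical,
        "efficiency": measured_tps / theoretical,
        "bottleneck": "memory" if bandwidth_limit < compute_limit else "compute",
    }


# Measured average speeds reported in this article (tokens/s)
RUNS = [
    (ModelConfig("gemma3:1b", 1.0e9, 4.5), 26.33),
    (ModelConfig("qwen3:0.6b", 0.6e9, 8), 38.84),
    (ModelConfig("qwen2.5:0.5b", 0.5e9, 4.5), 35.24),
    (ModelConfig("gemma3n:e2b", 2.0e9, 4.5), 8.98),
    (ModelConfig("google/gemma-3-1b-it", 1.0e9, 16), 4.59),
    (ModelConfig("Qwen/Qwen3-0.6B-FP8", 0.6e9, 8), 12.81),
    (ModelConfig("Qwen/Qwen2.5-0.5B-Instruct", 0.5e9, 16), 15.18),
]

if __name__ == "__main__":
    results = [roofline(cfg, tps) for cfg, tps in RUNS]
    for (cfg, _), r in zip(RUNS, results):
        print(f"{cfg.name:28s} OI={r['oi']:.2f} ceiling={r['theoretical_tps']:.2f} t/s "
              f"eff={r['efficiency']:.1%} ({r['bottleneck']}-bound)")
    print(f"average efficiency: {mean(r['efficiency'] for r in results):.1%}")
```

Because FLOPs and bytes both scale with the parameter count, OI here depends only on bits per parameter, which is why every Q4_K_M model lands at the same 3.23 FLOPs/byte.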

The Results: Speed and Efficiency

What Actually Runs Fast

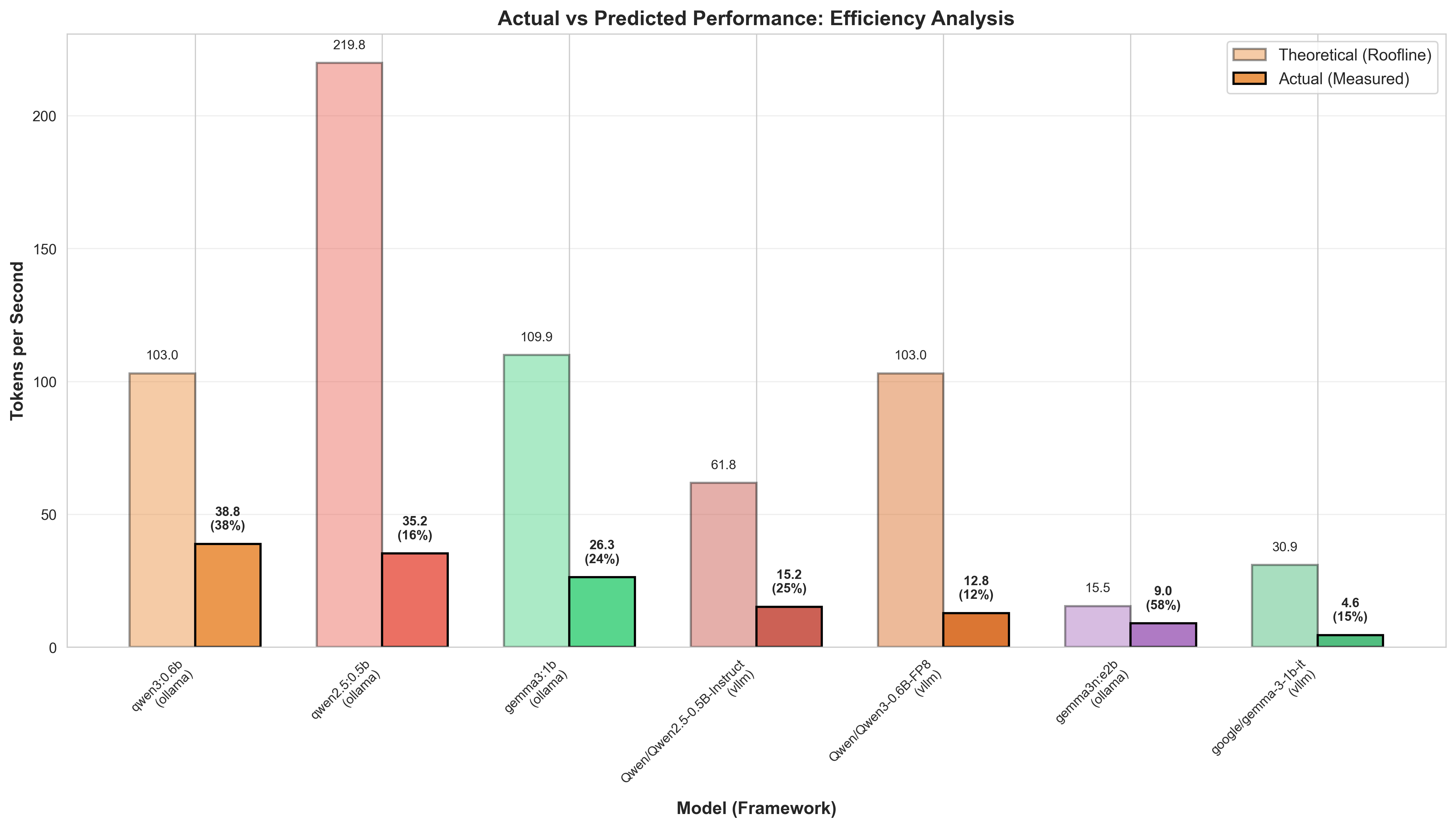

Here’s how the models ranked by token generation speed:

| Rank | Model | Backend | Avg Speed (t/s) | Std Dev (t/s) | Success Rate |
|---|---|---|---|---|---|
| 1 | qwen3:0.6b | Ollama | 38.84 | 1.42 | 100% |
| 2 | qwen2.5:0.5b | Ollama | 35.24 | 2.72 | 100% |
| 3 | gemma3:1b | Ollama | 26.33 | 2.56 | 100% |
| 4 | Qwen/Qwen2.5-0.5B-Instruct | vLLM | 15.18 | 2.15 | 100% |
| 5 | Qwen/Qwen3-0.6B-FP8 | vLLM | 12.81 | 0.36 | 100% |
| 6 | gemma3n:e2b | Ollama | 8.98 | 1.22 | 100% |
| 7 | google/gemma-3-1b-it | vLLM | 4.59 | 1.52 | 100% |

The standout finding: quantized models of 1B parameters or fewer hit 25-40 tokens/second, with Ollama outperforming vLLM by 2-6× on every model served by both, thanks to aggressive quantization and edge-optimized execution. These numbers are in the same range as independent benchmarks from NVIDIA’s Jetson AI Lab (Llama 3.2 3B at 27.7 t/s, SmolLM2 at 41 t/s), which suggests this is typical performance for the hardware class.

Responsiveness: First Token Latency

The time to generate the first output token—a critical metric for interactive applications—varied significantly:

- qwen3:0.6b (Ollama): 0.522 seconds

- gemma3:1b (Ollama): 1.000 seconds

- qwen2.5:0.5b (Ollama): 1.415 seconds

- gemma3n:e2b (Ollama): 1.998 seconds

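All four quantized Ollama models reached their first token within about 2 seconds, and the largest (gemma3n:e2b) was the slowest. Size is not the whole story, though: qwen2.5:0.5b took longer than the larger gemma3:1b. For a chatbot or interactive assistant, this delay matters because perceived responsiveness counts as much as raw throughput.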
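The write-up does not describe how first-token latency was timed. A minimal sketch, assuming a local Ollama server on its default port (11434) and its streaming `/api/generate` endpoint, which emits one JSON object per line: start a clock when the request is sent and stop it at the first chunk carrying non-empty `response` text. This wall-clock figure includes model load time if the model is not already resident in memory. The function names are hypothetical.

```python
import json
import time
import urllib.request
from typing import Callable, Iterable, Optional

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local endpoint


def first_token_latency(
    lines: Iterable[bytes],
    start: float,
    clock: Callable[[], float] = time.perf_counter,
) -> Optional[float]:
    """Seconds from `start` until the first streamed chunk containing text."""
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines
        chunk = json.loads(raw)
        if chunk.get("response"):
            return clock() - start
        if chunk.get("done"):
            break
    return None  # stream finished without producing text


def measure_ttft(model: str, prompt: str, url: str = OLLAMA_URL) -> Optional[float]:
    body = json.dumps({"model": model, "prompt": prompt, "stream": True}).encode()
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request) as response:
        # The HTTP response iterates line by line: one JSON object per line.
        return first_token_latency(response, start)
```

For example, `measure_ttft("qwen3:0.6b", "What is 2 + 2?")` returns the latency in seconds, or `None` if the stream ends without text; the `clock` parameter lets the parsing logic be tested without a running server.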

The Memory Bottleneck Revealed

When I compared actual performance against theoretical limits, the results were striking:

| Model | Theoretical (t/s) | Actual (t/s) | Efficiency (actual / theoretical) | Bottleneck | OI (FLOPs/byte) |
|---|---|---|---|---|---|
| gemma3:1b | 109.90 | 26.33 | 24.0% | Memory | 3.23 |
| qwen3:0.6b | 103.03 | 38.84 | 37.7% | Memory | 1.82 |
| qwen2.5:0.5b | 219.80 | 35.24 | 16.0% | Memory | 3.23 |
| gemma3n:e2b | 54.95 | 8.98 | 16.3% | Memory | 3.23 |
| google/gemma-3-1b-it | 30.91 | 4.59 | 14.9% | Memory | 0.91 |
| Qwen/Qwen3-0.6B-FP8 | 103.03 | 12.81 | 12.4% | Memory | 1.82 |
| Qwen/Qwen2.5-0.5B-Instruct | 61.82 | 15.18 | 24.6% | Memory | 0.91 |

Every single model is memory-bound in this single-stream inference scenario. Average efficiency against the bandwidth-bound ceiling is just 20.8%, so these runs do not even saturate the memory bus, let alone the compute units: gemma3:1b at 26.33 t/s performs roughly 2 × 10^9 × 26.33 ≈ 53 GFLOP/s, about 0.1% of the advertised peak. That advertised 40 TOPS? Largely untapped when generating one token at a time for a single user.

What This Actually Means

Why Memory Bandwidth Dominates (in Single-Stream Inference)

The roofline numbers tell a clear story: operational intensity ranges from 0.91 to 3.23 FLOPs/byte across all tested models during single-token generation (batch size = 1). For a model to become compute-bound on this device, its operational intensity would have to exceed the device’s ridge point:

$$
\mathrm{OI}_{\text{threshold}} = \frac{\text{Peak compute}}{\text{Memory bandwidth}} = \frac{40 \times 10^{12}\ \text{ops/s}}{68 \times 10^{9}\ \text{bytes/s}} \approx 588\ \text{ops/byte}
$$

Using the 10 TFLOPS FP16 figure instead of the 40 TOPS INT8 figure lowers the threshold to about 147 FLOPs/byte, which is still roughly 45-160× above what the tested models reach.

Single-stream autoregressive decoding falls roughly 180-650× short of the 588 ops/byte threshold (588 / 3.23 ≈ 182; 588 / 0.91 ≈ 646) because generating each token requires streaming the entire set of weights from memory for what are effectively matrix-vector multiplications, while performing only ~2 FLOPs per parameter. The compute units are idle most of the time, simply waiting for model weights and activations to arrive from memory.
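The threshold arithmetic and the size of the shortfall can be checked with a few lines of Python. The OI values are the per-precision figures from the roofline table, and the two peaks are the advertised INT8 and FP16 numbers from the spec list; the helper names are hypothetical.

```python
BANDWIDTH_BYTES_PER_S = 68e9

# Advertised peaks from the spec list
PEAKS = {"INT8, 40 TOPS": 40e12, "FP16, 10 TFLOPS": 10e12}

# Operational intensity per precision, from the roofline table (FLOPs/byte)
MODEL_OI = {"Q4_K_M": 3.23, "FP8": 1.82, "FP16": 0.91}


def ridge_point(peak_ops_per_s: float, bandwidth_bytes_per_s: float) -> float:
    """OI (ops/byte) above which a workload becomes compute-bound."""
    return peak_ops_per_s / bandwidth_bytes_per_s


def shortfall(oi: float, ridge: float) -> float:
    """How many times higher OI would need to be to reach the ridge point."""
    return ridge / oi


if __name__ == "__main__":
    for label, peak in PEAKS.items():
        ridge = ridge_point(peak, BANDWIDTH_BYTES_PER_S)
        gaps = ", ".join(f"{p}: {shortfall(oi, ridge):.0f}x" for p, oi in MODEL_OI.items())
        print(f"{label}: ridge = {ridge:.0f} ops/byte; short by {gaps}")
```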

Note: Production LLM serving with large batch sizes (32-256 requests) changes this dynamic dramatically—batching transforms matrix-vector operations into matrix-matrix multiplications, increasing operational intensity by 30-250× and making workloads compute-bound. However, edge devices serving single users cannot exploit this optimization.
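A rough way to see why batching changes the picture: if B sequences share one pass over the weights per decode step, FLOPs grow with B while weight traffic stays fixed. The sketch below ignores KV-cache and activation traffic (which also grow with B) and omits the article’s 10% overhead factor, so its OI is an optimistic upper bound and the batch sizes it reports are lower bounds. Function names are hypothetical.

```python
import math


def batched_oi(batch: int, bytes_per_param: float) -> float:
    """FLOPs per byte when `batch` sequences share one read of the weights.

    Each parameter contributes 2 FLOPs per sequence; the weight is read once.
    KV-cache and activation traffic are ignored (optimistic upper bound).
    """
    return 2.0 * batch / bytes_per_param


def min_batch_for_compute_bound(ridge: float, bytes_per_param: float) -> int:
    """Smallest batch whose (optimistic) OI reaches the ridge point."""
    return math.ceil(ridge * bytes_per_param / 2.0)


if __name__ == "__main__":
    int8_ridge = 40e12 / 68e9  # ~588 ops/byte
    fp16_ridge = 10e12 / 68e9  # ~147 FLOPs/byte
    print("FP16 weights vs FP16 peak:", min_batch_for_compute_bound(fp16_ridge, 2.0))
    print("Q4_K_M weights vs INT8 peak:", min_batch_for_compute_bound(int8_ridge, 4.5 / 8))
```

Under these assumptions, FP16 weights would need a batch of about 148 to reach the FP16 ridge point, and Q4_K_M weights about 166 to reach the INT8 one, toward the upper end of the 32-256 range mentioned above.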

The largest model tested, gemma3n:e2b at 3.5GB quantized (5.44B total parameters, 2B effective), shows only 16.3% efficiency, close to qwen2.5:0.5b (16.0%). Its operational intensity (3.23 FLOPs/byte) matches the other Q4_K_M models by construction: FLOPs and bytes both scale with parameter count, so OI depends only on bits per parameter. Its theoretical ceiling of 54.95 t/s assumes that only the 2B effective parameters are read per token. Its MatFormer architecture with selective parameter activation is what makes that assumption plausible, though these tests do not isolate how much weight traffic it actually avoids.

What This Means for Edge Deployment

The performance gap between Ollama and vLLM (2.3-5.7×) tells us something important about optimization priorities for single-user edge devices:

- Qwen 2.5 0.5B: Ollama (Q4_K_M, 350MB) at 35.24 t/s vs vLLM (FP16, 1GB) at 15.18 t/s (2.32× faster)

- Qwen 3 0.6B: Ollama (FP8) at 38.84 t/s vs vLLM (FP8) at 12.81 t/s (3.03× faster despite identical quantization)

- Gemma 3 1B: Ollama (Q4_K_M, 815MB) at 26.33 t/s vs vLLM (FP16, 2GB) at 4.59 t/s (5.74× faster)

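In single-stream scenarios, quantization attacks the memory bandwidth bottleneck directly: because decode is bandwidth-bound, tokens/second should scale roughly inversely with bytes per parameter. Moving from FP16 (16 bits) to Q4_K_M (about 4.5 bits/parameter) cuts weight traffic by about 3.6×, and the measured FP16-to-Q4_K_M gaps above (2.32× and 5.74×) bracket that figure, although they also include backend differences. Q4_K_M hits a sweet spot between model quality and speed. Going lower, to 2-bit quantization, might help further, but you’ll need to carefully evaluate output quality.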
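The bandwidth argument gives a simple expected speedup: if decode is purely bandwidth-bound, tokens/second scales with the inverse of bits per parameter. This sketch compares that expectation with the Ollama/vLLM ratios listed above. Each pair also differs in backend, so the gap between expected and measured is a rough hint at backend effects, not a controlled measurement.

```python
def expected_speedup(bits_before: float, bits_after: float) -> float:
    """Bandwidth-bound decode: speedup equals the reduction in bytes per weight."""
    return bits_before / bits_after


# (vLLM bits, Ollama bits, vLLM t/s, Ollama t/s), as reported in this article
PAIRS = {
    "Qwen 2.5 0.5B": (16, 4.5, 15.18, 35.24),
    "Qwen 3 0.6B": (8, 8, 12.81, 38.84),
    "Gemma 3 1B": (16, 4.5, 4.59, 26.33),
}

if __name__ == "__main__":
    for name, (bits_v, bits_o, tps_v, tps_o) in PAIRS.items():
        print(f"{name}: expected {expected_speedup(bits_v, bits_o):.2f}x, "
              f"measured {tps_o / tps_v:.2f}x")
```

Qwen 3 0.6B is the cleanest case: identical precision predicts no difference, so its 3.03× gap points to the serving stack rather than quantization.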

The real insight: Ollama’s edge-first design philosophy (GGUF format, streamlined execution, optimized kernels from llama.cpp) is fundamentally better aligned with single-stream, memory-constrained edge scenarios. vLLM’s datacenter features—continuous batching, PagedAttention, tensor parallelism—add overhead without providing benefits when serving individual users on unified memory architectures. These features shine in multi-user production serving where batching can be exploited, but hurt performance in the single-stream case.

What you should actually do: Stick with Ollama (Q4_K_M models in GGUF format) or TensorRT-LLM (INT4-quantized engines). Target the 0.5-1B parameter range (under 3GB) to leave headroom for KV cache. Focus your optimization efforts on memory access patterns and bandwidth reduction. Watch for emerging techniques like INT4 AWQ, sparse attention, and quantized KV caches.

Room for Improvement

The 20.8% average efficiency might sound terrible, but it’s actually typical for edge AI devices running single-stream inference. Datacenter GPUs hit 60-80% efficiency on optimized workloads—but that’s typically with large batch sizes that increase operational intensity. In comparable single-stream scenarios, even high-end GPUs see similar efficiency drops. Edge devices commonly land in the 15-40% range due to architectural tradeoffs and memory bandwidth constraints relative to their compute capability.

Three factors explain the gap:

- Architecture: Unified memory sacrifices bandwidth for integration simplicity. The 4MB L2 cache and 7-15W TDP limit further constrain performance.

- Software maturity: Edge inference frameworks lag behind their datacenter counterparts in optimization.

- Runtime overhead: Quantization/dequantization operations, Python abstractions, and non-optimized kernels all add up.

The 12-25% efficiency seen in six of the seven configurations (qwen3:0.6b is the outlier at 37.7%) suggests there’s room for 2-3× speedups through better software optimization, particularly in memory access patterns and kernel implementations. But fundamental performance leaps will require hardware changes: specifically, prioritizing memory bandwidth (200+ GB/s) over raw compute capability in future edge AI chips.

Where to Go From Here

Software Optimizations Worth Pursuing

- Optimize memory access patterns in attention and MLP kernels

- Implement quantized KV cache (8-bit or lower)

- Use small batch sizes (2-4) where the application allows, so each weight read from memory is reused across several sequences

- Overlap CPU-GPU pipeline operations to hide latency
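To size the payoff of a quantized KV cache, the standard estimate is 2 (keys and values) × layers × KV heads × head dimension × context length × bytes per element. The configuration below is illustrative: 24 layers, 2 KV heads, and a head dimension of 64 are assumed values for a small grouped-query-attention model, so substitute the numbers from your model’s `config.json`. The 5.2GB budget is the usable-memory figure from the hardware section, and the `fits` check ignores runtime overhead.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_elem: float, batch: int = 1) -> float:
    """Memory for cached keys and values across all layers."""
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_elem * batch


def fits(weight_bytes: float, kv_bytes: float, budget_bytes: float = 5.2e9) -> bool:
    """Crude check: weights plus KV cache within usable memory (no runtime overhead)."""
    return weight_bytes + kv_bytes <= budget_bytes


if __name__ == "__main__":
    # Illustrative small-model config (assumed values, not taken from the tests)
    cfg = dict(n_layers=24, n_kv_heads=2, head_dim=64, context_len=32768)
    fp16 = kv_cache_bytes(**cfg, bytes_per_elem=2)
    int8 = kv_cache_bytes(**cfg, bytes_per_elem=1)
    weights = 0.5e9 * 4.5 / 8  # 0.5B parameters at ~4.5 bits (Q4_K_M)
    print(f"FP16 KV: {fp16 / 2**20:.0f} MiB, 8-bit KV: {int8 / 2**20:.0f} MiB")
    print("fits in 5.2GB:", fits(weights, fp16))
```

With these assumed values, a full 32K-token context needs 384 MiB of FP16 KV cache, and an 8-bit cache halves that to 192 MiB.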

Research Directions

- Architectures with higher operational intensity (fewer memory accesses per compute operation)

- Sparse attention patterns to reduce memory movement

- On-device LoRA fine-tuning with frozen, quantized base weights

- Multi-model serving with shared base model weights

What Edge AI Hardware Designers Should Focus On

Future edge AI devices optimized for local, single-user LLM inference need a fundamental shift in priorities: memory bandwidth over raw compute capability. Specifically:

- 200+ GB/s memory bandwidth (3× current Jetson Orin Nano)

- HBM integration for higher bandwidth density

- 16GB+ capacity to support 7B+ parameter models

- Purpose-built INT4/INT8 accelerators with larger on-chip caches to reduce DRAM traffic
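Using the same bandwidth-bound model as the roofline analysis (bits per parameter / 8 × 1.1 bytes per parameter per token), a quick calculation shows what these targets would buy. The result is a ceiling, not a prediction: measured throughput on the Orin Nano reached only 12-38% of it. Function names are hypothetical.

```python
def decode_ceiling_tps(bandwidth_bytes_per_s: float, params: float,
                       bits_per_param: float, overhead: float = 1.1) -> float:
    """Upper bound on single-stream tokens/s when decode is bandwidth-bound."""
    return bandwidth_bytes_per_s / (params * bits_per_param / 8 * overhead)


def weight_bytes(params: float, bits_per_param: float) -> float:
    """Bytes needed to hold the weights alone at a given precision."""
    return params * bits_per_param / 8


if __name__ == "__main__":
    for bw in (68e9, 200e9):
        print(f"{bw / 1e9:.0f} GB/s: 1B Q4_K_M ceiling "
              f"{decode_ceiling_tps(bw, 1e9, 4.5):.0f} t/s, "
              f"7B Q4_K_M ceiling {decode_ceiling_tps(bw, 7e9, 4.5):.0f} t/s")
    print(f"7B weights: {weight_bytes(7e9, 4.5) / 1e9:.2f} GB at Q4_K_M, "
          f"{weight_bytes(7e9, 16) / 1e9:.0f} GB at FP16")
```

Raising bandwidth from 68 to 200 GB/s lifts the 1B Q4_K_M ceiling from about 110 to about 323 t/s, and a 7B model needs about 3.9 GB for Q4_K_M weights or 14 GB at FP16, which is why 16GB+ capacity matters.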

References

Williams, S., Waterman, A., & Patterson, D. (2009). “Roofline: An Insightful Visual Performance Model for Multicore Architectures.” Communications of the ACM, 52(4), 65-76.

NVIDIA Corporation. (2024). “Jetson Orin Nano Developer Kit Technical Specifications.” https://developer.nvidia.com/embedded/jetson-orin-nano-developer-kit

“Jetson AI Lab Benchmarks.” NVIDIA Jetson AI Lab. https://www.jetson-ai-lab.com/benchmarks.html

Gerganov, G., et al. (2023). “GGML - AI at the edge.” GitHub. https://github.com/ggerganov/ggml

Kwon, W., et al. (2023). “Efficient Memory Management for Large Language Model Serving with PagedAttention.” Proceedings of SOSP 2023.

Gemma Team. (2025). “Gemma 3 Technical Report.” arXiv preprint arXiv:2503.19786v1. https://arxiv.org/html/2503.19786v1

Yang, A., et al. (2025). “Qwen3 Technical Report.” arXiv preprint arXiv:2505.09388. https://arxiv.org/pdf/2505.09388

DeepSeek-AI. (2025). “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning.” arXiv preprint arXiv:2501.12948v1. https://arxiv.org/html/2501.12948v1

“Running LLMs with TensorRT-LLM on NVIDIA Jetson Orin Nano Super.” Collabnix. https://collabnix.com/running-llms-with-tensorrt-llm-on-nvidia-jetson-orin-nano-super/

Pope, R., et al. (2023). “Efficiently Scaling Transformer Inference.” Proceedings of MLSys 2023.

Frantar, E., et al. (2023). “GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers.” Proceedings of ICLR 2023.

Dettmers, T., et al. (2023). “QLoRA: Efficient Finetuning of Quantized LLMs.” Proceedings of NeurIPS 2023.

Lin, J., et al. (2023). “AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration.” arXiv preprint arXiv:2306.00978.